- Studio format

- Get surface textures you can’t get with traditional volumetric studios

- Covering a wide area with a small number of cameras by introducing robot cameras

- Extended point cloud and data processing speed

- Research and development is underway aiming for the broadcast usage phase around 2025

In May 2022, I visited Meta Studio at NHK Science & Technology Research Laboratories (STRL) during the STRL Open House 2022. In January 2023, I was able to visit Meta Studio again and hear how it has evolved since then.

Studio format

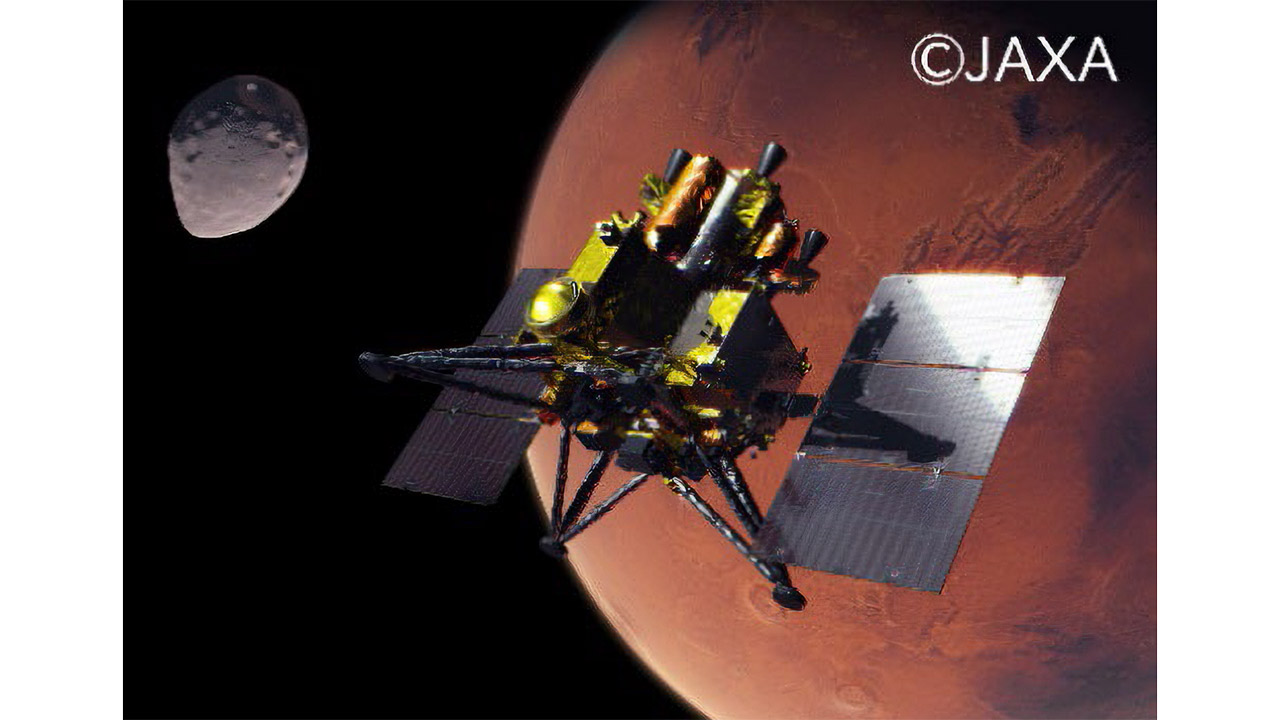

First of all, I would like to clarify that STRL’s Meta Studio is not a commercially available studio. It is a research and development base, and it does not accept shooting for external projects even if content creators want to use it. As the research and development arm of NHK, an organization that provides public broadcasting services, STRL’s major goal is to research volumetric video, which could become one of the next-generation video formats, and to build a studio format that can be used in Japan and overseas in the future.

Therefore, its technology development for volumetric capture is oriented toward research and academic value, rather than toward the quality improvements and the faster, more efficient data processing that commercial feasibility would demand.

Get surface textures you can’t get with traditional volumetric studios

STRL’s Meta Studio differs from volumetric capture studios of other companies in that it not only acquires 3D shapes, but also acquires surface light fields (light ray information from the subject surface) and texture information (diffuse reflectance, specular reflectance, surface roughness).

Acquiring these data makes it possible to express more photorealistic textures. In the demo, the surface of a human subject was changed to look like plaster or metal.

In volumetric capture, data from multiple cameras are integrated in post-processing, so the lighting around the entire subject is often kept constant during shooting, which makes it difficult to produce effects with lighting. Acquiring the surface light field, however, makes it possible to change the lighting and texture afterwards.

Specifically, reflection coefficients are added to the texture of the 3D model so that light reflection such as diffuse reflection and specular reflection can be reproduced. For example, when light hits human hair, an anisotropic reflection occurs that shines like an angel’s ring, and I was told that this kind of reflection can also be expressed.
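To make the role of these reflection coefficients concrete, here is a minimal sketch of how diffuse reflectance, specular reflectance, and surface roughness can drive relighting of a single surface point. It uses the textbook Blinn-Phong model, which is isotropic and is not STRL's actual method. The function names, the roughness-to-shininess mapping, and the plaster and metal values are all assumptions chosen for illustration.

```python
import math

def normalize(v):
    """Return v scaled to unit length (zero vectors are returned unchanged)."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        return tuple(v)
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def shade(normal, to_light, to_viewer, diffuse, specular, roughness):
    """Blinn-Phong intensity of one surface point under one light.

    diffuse / specular are reflection coefficients in [0, 1];
    roughness in (0, 1] controls how broad the highlight is.
    """
    n, l, v = normalize(normal), normalize(to_light), normalize(to_viewer)
    h = normalize(tuple(a + b for a, b in zip(l, v)))  # half vector
    n_dot_l = max(dot(n, l), 0.0)
    # Assumed mapping: rougher surface -> smaller exponent -> wider highlight.
    roughness = min(max(roughness, 1e-3), 1.0)
    shininess = 2.0 / (roughness * roughness) - 2.0
    highlight = max(dot(n, h), 0.0) ** shininess if n_dot_l > 0.0 else 0.0
    return diffuse * n_dot_l + specular * highlight

# Hypothetical material parameters, not measured values.
PLASTER = dict(diffuse=0.9, specular=0.02, roughness=0.9)
METAL = dict(diffuse=0.1, specular=0.9, roughness=0.2)

normal, light = (0, 0, 1), (0, 0, 1)
for name, material in (("plaster", PLASTER), ("metal", METAL)):
    head_on = shade(normal, light, (0, 0, 1), **material)
    off_axis = shade(normal, light, (1, 0, 1), **material)
    print(f"{name}: head-on {head_on:.3f}, off-axis {off_axis:.3f}")
```

With the same geometry and lighting, swapping only the three material parameters changes how strongly the brightness depends on the viewing direction, which is the kind of plaster-to-metal change shown in the demo. Reproducing the ring-shaped highlight on hair would need an anisotropic model, which this sketch does not cover.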

Covering a wide area with a small number of cameras by introducing robot cameras

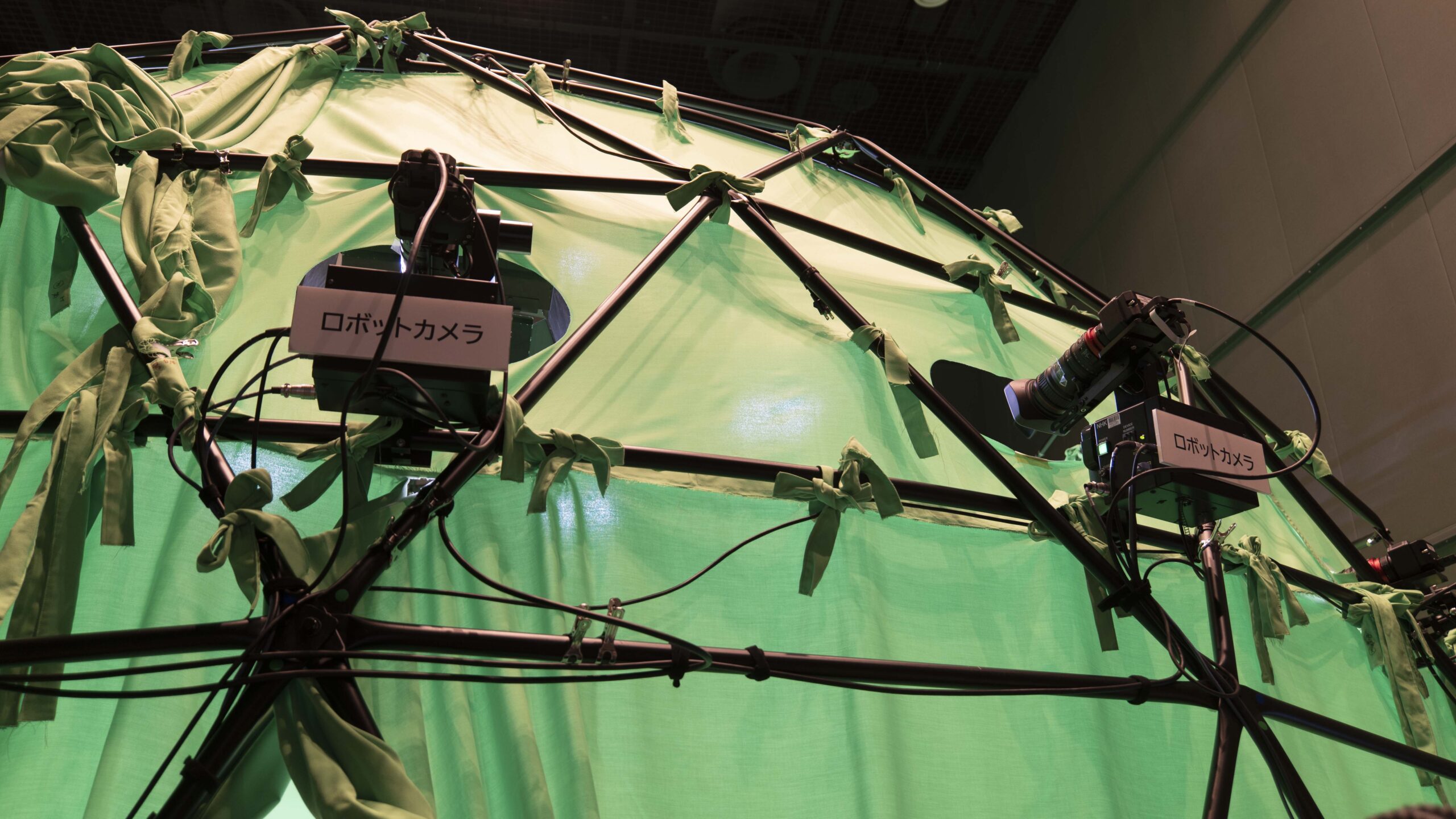

Another feature is that only 26 cameras are used for shooting, a small number compared with other volumetric studios, which use nearly 100. This is because each camera is a robot camera that can pan, tilt, and zoom. Moving the cameras to follow the size and movement of the subject improves resolution and expands the shooting area.

Volumetric capture uses a method called stereo matching to reconstruct 3D images from a large amount of 2D video, so the positions and settings of the cameras and lenses must be aligned strictly.

For example, if one camera is misaligned or out of focus, it will adversely affect subsequent image processing. In an environment that requires strict camera installation and fixation, moving the camera is a challenging undertaking.
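The sensitivity to misalignment can be illustrated with the standard depth relation for a rectified stereo pair, Z = f · B / d, where f is the focal length in pixels, B is the distance between the two cameras, and d is the disparity in pixels. The sketch below is not STRL's pipeline. The function name and all numeric values are hypothetical and only show how a one-pixel matching error turns into a depth error.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth in metres of a point seen by a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px

# Hypothetical setup: 2000 px focal length, cameras 0.5 m apart.
FOCAL_PX = 2000.0
BASELINE_M = 0.5

true_depth = depth_from_disparity(FOCAL_PX, BASELINE_M, 250.0)
# A camera that has shifted slightly makes the match land one pixel off.
shifted_depth = depth_from_disparity(FOCAL_PX, BASELINE_M, 249.0)

print(f"depth with correct match: {true_depth:.3f} m")
print(f"depth with 1 px error:    {shifted_depth:.3f} m")
print(f"depth error:              {(shifted_depth - true_depth) * 1000:.1f} mm")
```

Because depth is inversely proportional to disparity, the same one-pixel error costs more depth accuracy the farther the subject is from the cameras. A camera that pans, tilts, or zooms changes f and the camera geometry, so these parameters have to be known accurately at every moment for the relation to hold.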

Extended point cloud and data processing speed

The data generated by Meta Studio is point cloud data called an extended point cloud. Many commercial volumetric capture studios deliver meshed 3D models, but Meta Studio outputs point cloud data. Meshing is the step in which each company uses its own proprietary algorithm to compete on image quality, so Meta Studio limits itself to generating point cloud data, which is expected to become a standard data format in the future.
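The article does not describe the actual layout of the extended point cloud. As a purely hypothetical sketch, one way to picture it is a point that carries reflectance attributes in addition to its 3D position, assuming the attributes are the diffuse reflectance, specular reflectance, and surface roughness mentioned earlier. The class name, field names, and the `retexture` helper below are all invented for illustration.

```python
from dataclasses import dataclass, replace
from typing import List, Tuple

@dataclass(frozen=True)
class ExtendedPoint:
    """Hypothetical point record: geometry plus per-point reflectance."""
    position: Tuple[float, float, float]  # x, y, z in metres
    diffuse: Tuple[float, float, float]   # RGB diffuse reflectance, 0..1
    specular: float                       # specular reflectance, 0..1
    roughness: float                      # surface roughness, 0..1

def retexture(points: List[ExtendedPoint],
              diffuse: Tuple[float, float, float],
              specular: float,
              roughness: float) -> List[ExtendedPoint]:
    """Return a copy of the cloud with the same shape but a new material."""
    return [replace(p, diffuse=diffuse, specular=specular, roughness=roughness)
            for p in points]

# Two made-up points on a subject with a skin-like material.
captured = [
    ExtendedPoint((0.00, 1.60, 0.10), (0.80, 0.60, 0.50), 0.04, 0.6),
    ExtendedPoint((0.02, 1.61, 0.10), (0.78, 0.59, 0.50), 0.04, 0.6),
]

# Swap the material for a metal-like one; positions are untouched.
metallic = retexture(captured, diffuse=(0.1, 0.1, 0.1), specular=0.9, roughness=0.2)
print(metallic[0])
```

Keeping the material attributes on each point, separate from its position, is what would allow the appearance to be changed without recapturing or remeshing the shape.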

Meta Studio’s data processing currently takes more than 10 minutes per frame. That figure is for CPU processing, however, and work to speed it up with GPU and FPGA processing is under way and looks set to continue. If real-time processing becomes possible by combining hardware acceleration with software speed-ups such as improved algorithms, the data could be used for live streaming and similar purposes, and Meta Studio’s range of applications is likely to grow.
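A rough calculation shows how far this is from real time. The sketch below takes the 10 minutes per frame quoted above as a lower bound and assumes a target of 30 frames per second, a frame rate that does not come from STRL. The function names are hypothetical.

```python
def required_speedup(seconds_per_frame, target_fps):
    """Factor by which per-frame processing must shrink to keep up with capture."""
    return seconds_per_frame * target_fps

def offline_processing_days(clip_seconds, fps, seconds_per_frame):
    """Days needed to process a clip one frame at a time at the current speed."""
    frames = clip_seconds * fps
    return frames * seconds_per_frame / 86400  # 86400 seconds in a day

CURRENT_SECONDS_PER_FRAME = 10 * 60  # "more than 10 minutes", so a lower bound
ASSUMED_FPS = 30                     # assumption, not stated in the article

speedup = required_speedup(CURRENT_SECONDS_PER_FRAME, ASSUMED_FPS)
days = offline_processing_days(60, ASSUMED_FPS, CURRENT_SECONDS_PER_FRAME)

print(f"speed-up needed for real time: at least {speedup:,}x")
print(f"time to process a 1-minute clip today: at least {days} days")
```

Under these assumptions the required speed-up is on the order of ten thousand times or more, which suggests why hardware acceleration and algorithmic improvements would both be needed.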

Research and development is underway aiming for the broadcast usage phase around 2025

In this way, STRL’s Meta Studio is pursuing research and development toward a studio format that can be used over the long term, rather than aiming for short-term commercialization. When asked about future plans, the team said it intends to improve data quality and speed up processing so that it can move from the research stage to the broadcast use phase around 2025. Shooting currently takes place in a green screen dome, and there also appears to be an idea of turning it into something like an LED dome to create an environment in which performers can perform more easily.

Takayuki Aoki|Profile

Kadinche Co., Ltd. CEO

Received a Ph.D. (policy and media) from Keio University in 2009. After working for Sony Corporation, he established Kadinche Corporation, where he is engaged in XR-related software development. In 2018, he established Miecle Co., Ltd., a joint venture with Shochiku Co., Ltd., and in January 2022 opened Daikanyama Metaverse Studio to work on content production using virtual production methods.