Crescent’s Digi-Cast 2nd studio

- What is volumetric capture and volumetric video?

- Realize 360° 3D volume video shooting

- Making imaging equipment more compact and cost-effective, making it more familiar

What is volumetric capture and volumetric video?

Volumetric capture is a method of filming a moving subject and measuring it in 3D, so that the result can be played back like a moving image. The data created by volumetric capture is called volumetric video.

A photograph is a 2D still image, and a video is a sequence of 2D still images with an added time dimension. By the same logic, volumetric video is 3D data with an added time dimension. As a technology, it is still a cutting-edge field. Both in Japan and overseas, research and development is under way on capture (filming) methods, usage (as 2D video and as 3D data), and transmission (sending/receiving and compression), and dedicated studios are being established.
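To make the analogy concrete, here is a minimal Python sketch of how the three kinds of data might be laid out in memory. The array shapes, the `VolumetricFrame` class, and the choice of colored points per frame are illustrative assumptions, not a standard volumetric video format.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class VolumetricFrame:
    """One time step of a volumetric video (hypothetical layout)."""
    timestamp: float       # seconds from the start of the take
    positions: np.ndarray  # (N, 3) float32 XYZ coordinates
    colors: np.ndarray     # (N, 3) uint8 RGB, one per point


# Tiny sizes so the example stays cheap to run.
H, W, T, FPS = 4, 6, 10, 30

photo = np.zeros((H, W, 3), dtype=np.uint8)     # 2D still: rows x cols x RGB
video = np.zeros((T, H, W, 3), dtype=np.uint8)  # 2D stills stacked along time

# 3D data along time: in this sketch the point count N differs per frame.
volumetric_video = [
    VolumetricFrame(
        timestamp=i / FPS,
        positions=np.zeros((n, 3), dtype=np.float32),
        colors=np.zeros((n, 3), dtype=np.uint8),
    )
    for i, n in enumerate([100, 120, 90])
]
```

Because the frames in this sketch do not share one fixed shape, the volumetric video is kept as a list of frames rather than a single array like `video`.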

Realize 360° 3D volume video shooting

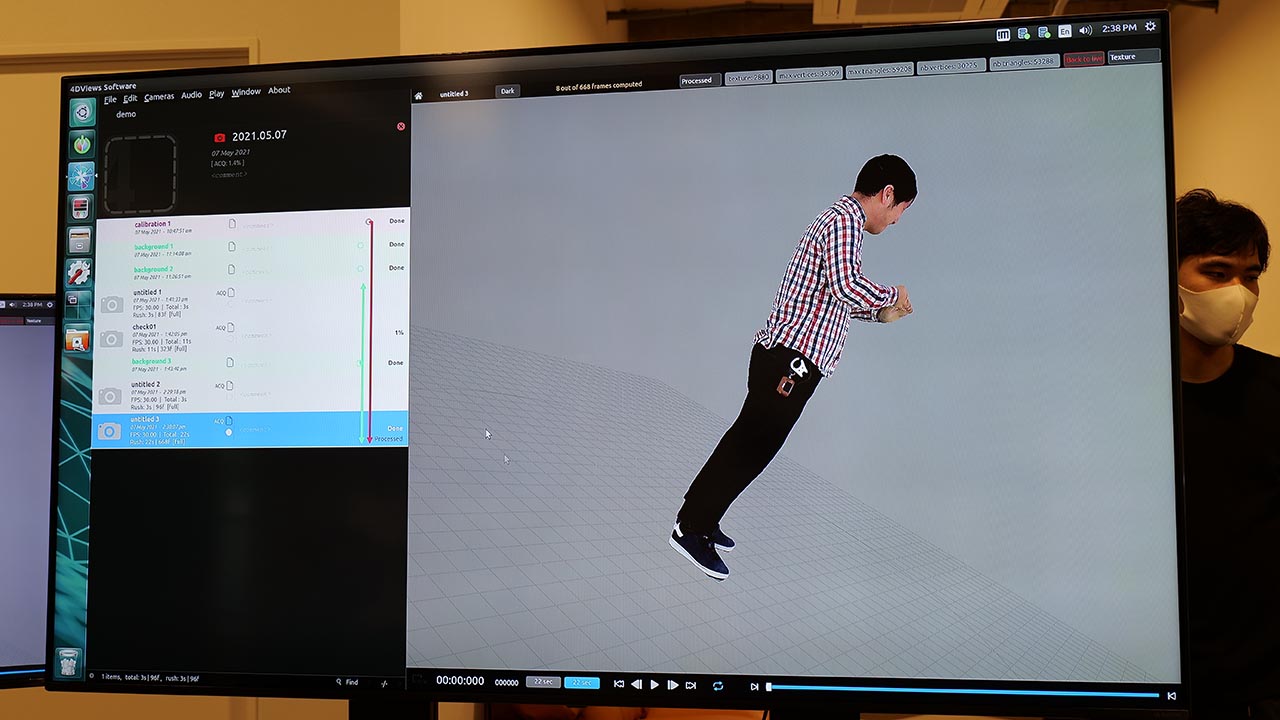

In most volumetric studios, multiple cameras (from a few to hundreds) are installed around the subject to cover it from 360°. Typically, dozens to more than 100 cameras are precisely synchronized, and machine vision cameras with global shutters are often used. A volumetric video is created by capturing a moving subject with these cameras and reconstructing it in 3D from the captured 2D footage, through real-time processing and post-processing.
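Camera synchronization in such studios is usually handled in hardware, for example with a common trigger signal. As a hedged illustration of what "synchronized" means for the recorded data, the sketch below finds, for each reference tick, the closest frame from each camera and rejects matches outside a tolerance. The function names, the millisecond timestamps, and the tolerance value are all hypothetical.

```python
from bisect import bisect_left


def nearest_index(sorted_ts, t):
    """Index of the timestamp in sorted_ts closest to t (None if the list is empty)."""
    i = bisect_left(sorted_ts, t)
    candidates = [j for j in (i - 1, i) if 0 <= j < len(sorted_ts)]
    if not candidates:
        return None
    return min(candidates, key=lambda j: abs(sorted_ts[j] - t))


def match_frames(reference_ts, camera_ts, tolerance):
    """For every reference tick, map each camera name to its matching frame index.

    camera_ts maps a camera name to its sorted list of frame timestamps.
    A camera gets None for a tick when its closest frame is off by more than tolerance.
    """
    matched = []
    for t in reference_ts:
        row = {}
        for cam, ts in camera_ts.items():
            j = nearest_index(ts, t)
            row[cam] = j if j is not None and abs(ts[j] - t) <= tolerance else None
        matched.append(row)
    return matched


# Timestamps in milliseconds for a ~30 fps capture; cam1's third frame arrives late.
ticks = [0, 33, 66]
cams = {"cam0": [0, 33, 66], "cam1": [1, 34, 80]}
sets = match_frames(ticks, cams, tolerance=2)
complete = [i for i, row in enumerate(sets) if None not in row.values()]
```

Only ticks that have a frame from every camera form a complete set for 3D reconstruction; in this toy data that is ticks 0 and 1.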

Creating a volumetric video means reconstructing the subject's original 3D shape from multiple 2D moving images, a process called 3D reconstruction. Many volumetric studios in Japan use RGB cameras with the visual volume intersection (visual hull) method and the stereo method. Some also use depth sensors, which measure the distance from the camera to the subject. However, a depth sensor can only measure up to a limited distance, which forces the studio to be small; for that reason, RGB cameras are more commonly used.
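The visual volume intersection (visual hull) idea can be sketched in a few lines: a voxel is kept only if it projects inside the subject's silhouette in every camera. This minimal NumPy version assumes each camera is given as a 3x4 projection matrix and each silhouette as a boolean mask; both inputs and the function name are hypothetical, and a production pipeline would add calibration, refinement, and meshing on top.

```python
import numpy as np


def carve_visual_hull(silhouettes, projections, voxels):
    """Return the voxel centres that fall inside every camera's silhouette.

    silhouettes: list of (H, W) boolean masks, True where the subject is.
    projections: list of 3x4 camera matrices mapping homogeneous XYZ to (u, v, w).
    voxels: (N, 3) array of candidate voxel centres.
    """
    keep = np.ones(len(voxels), dtype=bool)
    homog = np.hstack([voxels, np.ones((len(voxels), 1))])
    for mask, P in zip(silhouettes, projections):
        uvw = homog @ P.T
        w = uvw[:, 2]
        valid = w > 1e-9  # ignore points behind (or on) the camera plane
        u = np.full(len(voxels), -1)
        v = np.full(len(voxels), -1)
        u[valid] = np.round(uvw[valid, 0] / w[valid]).astype(int)
        v[valid] = np.round(uvw[valid, 1] / w[valid]).astype(int)
        h, wid = mask.shape
        inside = valid & (u >= 0) & (u < wid) & (v >= 0) & (v < h)
        hit = np.zeros(len(voxels), dtype=bool)
        hit[inside] = mask[v[inside], u[inside]]
        keep &= hit  # carve away anything outside this silhouette
    return voxels[keep]


# Toy setup: a 5x5x5 voxel grid and two orthographic-style cameras.
r = np.arange(-2, 3)
grid = np.stack(np.meshgrid(r, r, r, indexing="ij"), axis=-1).reshape(-1, 3).astype(float)
front = np.array([[1, 0, 0, 2], [0, 1, 0, 2], [0, 0, 0, 1]], dtype=float)  # sees (x, y)
side = np.array([[0, 0, 1, 2], [0, 1, 0, 2], [0, 0, 0, 1]], dtype=float)   # sees (z, y)
mask = np.zeros((5, 5), dtype=bool)
mask[1:4, 1:4] = True  # a 3x3 square silhouette in both views
hull = carve_visual_hull([mask, mask], [front, side], grid)
```

With two square silhouettes the carved result is a 3x3x3 block of 27 voxels. With only a few views the hull stays coarse, which is one reason studios use many cameras.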

There are two types of 3D data that can be created: point cloud data, which is a large set of points, and mesh data, which connects the points into flat polygonal faces (typically triangles). Because a point cloud is just a collection of points, gaps appear between the points when the data is enlarged. A mesh does not develop gaps when enlarged, but since each face is flat rather than curved, the surface may look angular. In either case, if the amount of data and the resolution are sufficient, these artifacts are hardly noticeable, but care is needed when enlarging.
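The difference can be seen with a toy example. The NumPy sketch below stores the same flat square patch both as a bare point cloud and as a triangle mesh (vertices plus faces that index into them), then enlarges it 4x. The helper names and the flat grid patch are hypothetical; the point is that point spacing grows with the scale factor, while the mesh faces keep covering the whole patch.

```python
import numpy as np


def mean_point_spacing(points):
    """Average distance from each point to its nearest neighbour (brute force, O(N^2))."""
    diff = points[:, None, :] - points[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)
    np.fill_diagonal(dist, np.inf)  # ignore each point's distance to itself
    return dist.min(axis=1).mean()


def grid_patch(n):
    """Flat n x n patch: vertices on a unit grid plus two triangles per grid cell."""
    xs, ys = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
    vertices = np.stack([xs.ravel(), ys.ravel(), np.zeros(n * n)], axis=1).astype(float)
    faces = []
    for i in range(n - 1):
        for j in range(n - 1):
            a = i * n + j  # vertex (i, j)
            b = a + 1      # vertex (i, j + 1)
            c = a + n      # vertex (i + 1, j)
            d = c + 1      # vertex (i + 1, j + 1)
            faces += [(a, b, d), (a, d, c)]
    return vertices, np.array(faces)


def mesh_area(vertices, faces):
    """Total surface area covered by the triangles."""
    tri = vertices[faces]  # (F, 3, 3): corner coordinates of each triangle
    cross = np.cross(tri[:, 1] - tri[:, 0], tri[:, 2] - tri[:, 0])
    return 0.5 * np.linalg.norm(cross, axis=1).sum()


vertices, faces = grid_patch(4)  # 16 points, 18 triangles
for scale in (1, 4):
    scaled = vertices * scale
    print(scale, mean_point_spacing(scaled), mesh_area(scaled, faces))
```

Enlarging by 4x spreads the points 4 units apart with empty space between them, whereas the 18 triangles still tile the entire, now 16x larger, area. The flat faces are also why a coarse mesh can look angular on curved surfaces.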

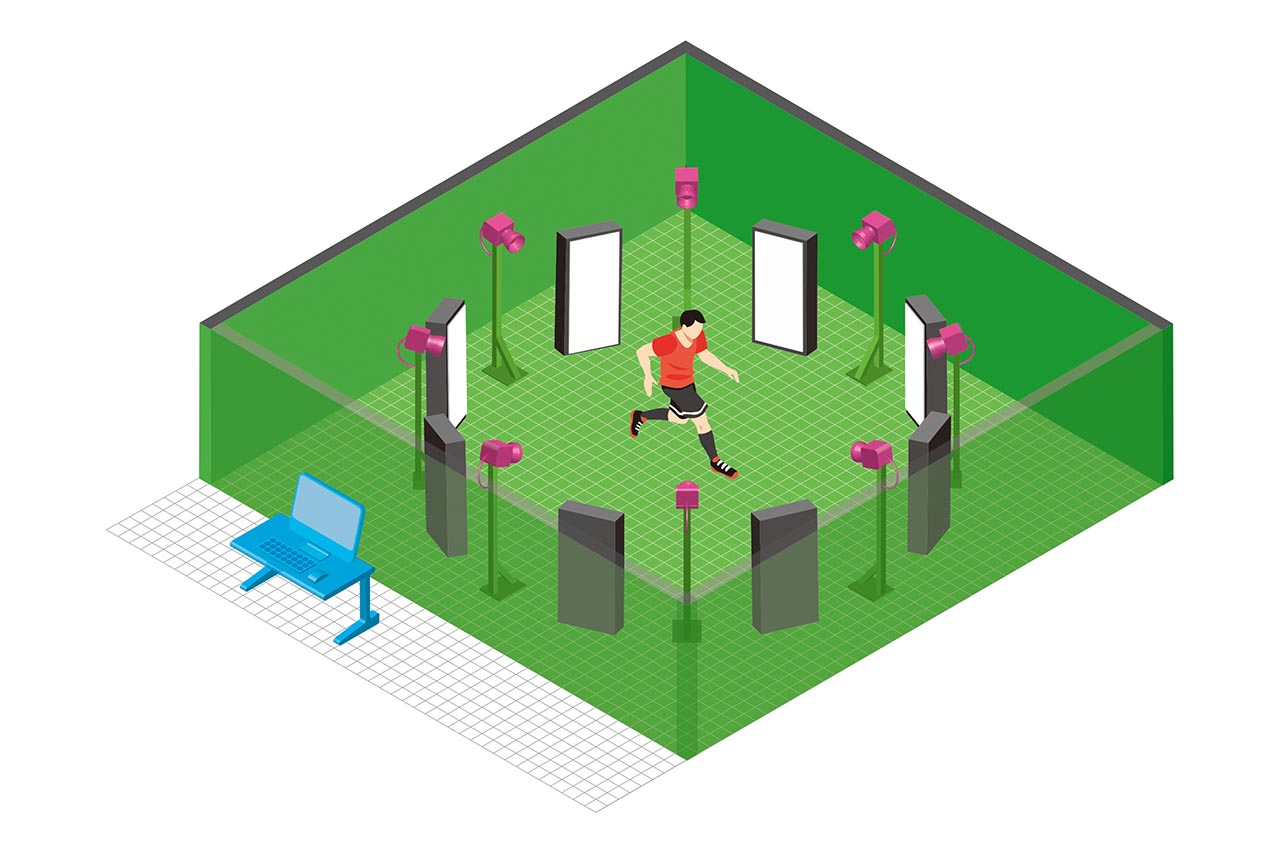

■ Volumetric studio configuration example

- Cameras surround the subject through 360 degrees (sometimes more than 100 cameras)

- Lighting illuminates the subject evenly

- Images from all cameras are recorded synchronously, and point clouds and meshes are generated on multiple workstations in the sub-studio.
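If each frame can be reconstructed independently (an assumption; pipelines that track a mesh over time also need neighbouring frames), a recorded take can be divided among the workstations in contiguous chunks. A hypothetical helper:

```python
def split_frames(num_frames, num_workstations):
    """Divide frame indices 0..num_frames-1 into contiguous, near-equal chunks."""
    base, extra = divmod(num_frames, num_workstations)
    chunks = []
    start = 0
    for k in range(num_workstations):
        size = base + (1 if k < extra else 0)  # the first `extra` machines take one more
        chunks.append(range(start, start + size))
        start += size
    return chunks


# e.g. a 10-second take at 30 fps spread over 4 workstations
for k, frames in enumerate(split_frames(300, 4)):
    print(f"workstation {k}: frames {frames.start}-{frames.stop - 1}")
```

Contiguous chunks keep consecutive frames on the same machine, which would matter if a later step compares neighbouring frames.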

Once a subject has been captured as volumetric video, the camera work and camera angle can be changed after the fact, and the result can be exported as 2D video, sometimes called free-viewpoint video. The data can also be used as 3D content that viewers can look at from any side, by placing it in a 3D metaverse space or combining it with AR or MR.
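Changing the camera angle after capture amounts to rendering the reconstructed 3D data through a new virtual camera. The sketch below projects a point cloud through a pinhole camera placed anywhere with a look-at transform. `look_at`, `render_points`, and the focal length and resolution values are assumptions for illustration; a real free-viewpoint renderer would also rasterize meshes, resolve occlusion, and apply textures.

```python
import numpy as np


def look_at(eye, target, up=(0.0, 1.0, 0.0)):
    """World-to-camera rotation R and translation t for a camera at eye looking at target.

    Camera axes: x points right, y points down (image rows), z points forward.
    Assumes the viewing direction is not parallel to up.
    """
    eye, target, up = (np.asarray(v, dtype=float) for v in (eye, target, up))
    forward = target - eye
    forward /= np.linalg.norm(forward)
    right = np.cross(forward, up)
    right /= np.linalg.norm(right)
    true_up = np.cross(right, forward)
    R = np.stack([right, -true_up, forward])
    t = -R @ eye
    return R, t


def render_points(points, eye, target, focal_px, width, height):
    """Project (N, 3) world points to pixel coordinates seen from a virtual camera.

    Points behind the camera are dropped, so the output may have fewer rows.
    """
    R, t = look_at(eye, target)
    cam = points @ R.T + t
    cam = cam[cam[:, 2] > 0]
    u = focal_px * cam[:, 0] / cam[:, 2] + width / 2
    v = focal_px * cam[:, 1] / cam[:, 2] + height / 2
    return np.stack([u, v], axis=1)


cloud = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
front_view = render_points(cloud, eye=(0, 0, 5), target=(0, 0, 0), focal_px=500, width=640, height=480)
side_view = render_points(cloud, eye=(5, 0, 0), target=(0, 0, 0), focal_px=500, width=640, height=480)
```

From the side, the first two points land on the same pixel, which is why a real renderer needs depth testing to decide which surface is visible.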

A green screen is often used for the background. Because the 3D data can be placed in a different environment after shooting, producing images that look as if they were shot there, volumetric capture is sometimes positioned as a virtual production method.
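Beyond enabling background replacement, a green screen can also make it easier to separate the subject from the background in each camera image, and such per-camera masks can serve as silhouettes for silhouette-based reconstruction. A deliberately simple chroma-key sketch follows; the function name and the `dominance` threshold are assumptions that would need tuning for real lighting and camera response.

```python
import numpy as np


def green_screen_mask(rgb, dominance=40):
    """Return a boolean mask that is True for foreground (subject) pixels.

    A pixel counts as green-screen background when its green channel exceeds
    both red and blue by more than `dominance` (on a 0-255 scale).
    """
    img = rgb.astype(np.int16)  # avoid uint8 wrap-around when subtracting
    r, g, b = img[..., 0], img[..., 1], img[..., 2]
    background = (g - r > dominance) & (g - b > dominance)
    return ~background


# One row of two pixels: a green-screen pixel and a reddish subject pixel.
frame = np.array([[[20, 200, 30], [200, 30, 30]]], dtype=np.uint8)
mask = green_screen_mask(frame)
```

Production keyers also deal with green spill on the subject and soft edges, which this hard threshold ignores.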

Three typical virtual production methods are summarized below: green screen, LED wall, and volumetric capture. The first two produce 2D video as their final product, whereas volumetric capture is distinctive in producing both 2D video and 3D data.

Making imaging equipment more compact and cost-effective, making it more familiar

Volumetric data can be used in two ways: as 2D free-viewpoint video, and as 3D data placed in 3D spaces such as the metaverse. Each studio adapts its rendering method to the final data format to produce higher-quality, more natural images, but one of the major open issues is lighting. The color and appearance of human skin and of clothing textures change with sunlight and artificial lighting, and how light is reflected depends on the material.

However, in many existing volumetric capture methods, the subject is lit uniformly during shooting, so the resulting data is a uniformly bright 3D model. If you place such a model in a dark metaverse environment, for example, it looks out of place. One way to solve this problem is relighting technology.
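At its simplest, relighting means re-shading the model under new lights, which requires the surface's own color (albedo) and orientation (normals) rather than a baked-in, uniformly lit color. The sketch below uses a basic Lambertian (diffuse-only) model with hypothetical inputs; it is not the method used by any particular studio, and real skin and fabric need more elaborate reflectance models.

```python
import numpy as np


def relight_lambert(albedo, normals, light_dir, light_rgb, ambient_rgb=(0.0, 0.0, 0.0)):
    """Diffuse shading: color = albedo * (ambient + max(0, n . l) * light).

    albedo: (N, 3) surface color in [0, 1], free of baked-in lighting.
    normals: (N, 3) unit surface normals.
    light_dir: direction pointing from the surface toward the light.
    """
    l = np.asarray(light_dir, dtype=float)
    l = l / np.linalg.norm(l)
    n_dot_l = np.clip(normals @ l, 0.0, None)[:, None]  # (N, 1), 0 for faces turned away
    shading = np.asarray(ambient_rgb, dtype=float) + n_dot_l * np.asarray(light_rgb, dtype=float)
    return np.clip(albedo * shading, 0.0, 1.0)


# Two surface points with the same albedo, one facing the light and one facing away.
albedo = np.array([[0.8, 0.6, 0.4], [0.8, 0.6, 0.4]])
normals = np.array([[0.0, 0.0, 1.0], [0.0, 0.0, -1.0]])
dark_scene = relight_lambert(albedo, normals, light_dir=(0, 0, 1),
                             light_rgb=(0.5, 0.5, 0.6), ambient_rgb=(0.05, 0.05, 0.08))
```

Under a dim, bluish light the side facing away receives only the ambient term, so the model darkens to match its surroundings instead of staying uniformly bright.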

The following video introduces Google's volumetric capture using light field technology, which presents a solution to the lighting problem described above. By building a volumetric capture rig with special lights, Google makes it possible to relight the volumetric video in post-processing.

Currently, volumetric shooting is only possible in a volumetric studio with large-scale equipment, but as capture devices become smaller and more portable, shooting costs are expected to fall and the amount of volumetric data to grow.

In addition to being used for large-scale entertainment content, it is conceivable that user-generated volumetric videos will appear on video platforms such as YouTube and TikTok, and metaverse platforms such as VRChat and Roblox.

Just as video technologies in general have evolved to support relay broadcasting and live distribution, volumetric video will also come to support live streaming. The technical challenges are compression and encoding for volumetric video, which tends to involve large amounts of data; encoders and decoders that support these formats; and rendering technology that can display the data at high frame rates. Many research institutes and manufacturers are developing these technologies, so I expect them to become widely accessible within the next two to three years.
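To see why data volume is the central challenge, a back-of-the-envelope calculation helps. Assuming (hypothetically) 1 million points per frame, each stored as three float32 coordinates plus 8-bit RGB (15 bytes), at 30 fps the uncompressed stream is 15 × 1,000,000 × 30 × 8 = 3.6 Gbit/s. The sketch below reproduces that figure and shows one of the simplest reduction steps, quantizing coordinates to 16-bit integers within the bounding box. The function names and numbers are illustrative only; actual volumetric codecs go much further.

```python
import numpy as np


def raw_bitrate_bps(points_per_frame, fps, bytes_per_point=3 * 4 + 3):
    """Uncompressed bit rate; default is XYZ as float32 (12 bytes) + RGB as uint8 (3 bytes)."""
    return points_per_frame * bytes_per_point * fps * 8


def quantize_positions(points, bits=16):
    """Map float coordinates onto a 2**bits grid spanning the frame's bounding box."""
    assert 1 <= bits <= 16, "this sketch stores codes as uint16"
    lo = points.min(axis=0)
    hi = points.max(axis=0)
    levels = 2 ** bits - 1
    step = (hi - lo) / levels
    step = np.where(step > 0, step, 1.0)  # avoid dividing by zero on a flat axis
    codes = np.round((points - lo) / step).astype(np.uint16)
    return codes, lo, step


def dequantize_positions(codes, lo, step):
    """Recover approximate coordinates; the error per axis is at most step / 2."""
    return lo + codes.astype(np.float64) * step


rng = np.random.default_rng(0)
pts = rng.uniform(-1.0, 1.0, size=(1000, 3))
codes, lo, step = quantize_positions(pts)
restored = dequantize_positions(codes, lo, step)
print(raw_bitrate_bps(1_000_000, 30) / 1e9, "Gbit/s raw")
print(raw_bitrate_bps(1_000_000, 30, bytes_per_point=2 * 3 + 3) / 1e9, "Gbit/s with 16-bit XYZ")
```

Quantization alone only trims the stream from 15 to 9 bytes per point, which suggests why dedicated compression and matching encoders and decoders are needed before live streaming becomes practical.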

In this way, the future of volumetric capture will depend on how much demand for volumetric video grows, both as 2D and as 3D content. In the 3D industry in particular, a metaverse industry producing content for VR and MR head-mounted displays has emerged alongside the game industry, which has long been a large market. Demand for live-action-based 3D content, not only created content, will increase, and volumetric video will probably be one of the main players in that live-action-based 3D content.

Originally written in Japanese by Takayuki Aoki

Takayuki Aoki | Profile

CEO of Kadinche Co., Ltd. In 2009, received a Ph.D. (policy and media) from Keio University. After working for Sony Corporation, he established Kadinche Corporation. Engaged in software development related to XR at Kadinche. In 2018, he established Miecle Co., Ltd., a joint venture with Shochiku Co., Ltd., and opened Daikanyama Metaverse Studio in January 2022 to work on content production using virtual production methods.